Winutils Exe Hadoop S

- Running Spark applications on Windows is, in general, no different from running them on other operating systems. One common stumbling block is the error: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

- Initialization of the class Shell on the client requires 'winutils.exe', even though the executable is not used afterwards. The following exception is shown in the log: 10:02:13,492 [main] ERROR Shell: Failed to locate the winutils binary in the hadoop binary path java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

This happens because the Hadoop configuration is initialized every time a Spark context is created, regardless of whether Hadoop is required. I propose adding a flag to indicate whether the Hadoop configuration is required (or starting this configuration manually).

I am getting the following error while starting the namenode for the latest hadoop-2.2 release. I didn't find the winutils.exe file in the hadoop bin folder. I tried the commands below.

15 Answers

Simple solution: download it from here and add it to $HADOOP_HOME/bin

(Source: click here)

EDIT:

For hadoop-2.6.0 you can download binaries from Titus Barik's blog.

I not only needed to point HADOOP_HOME to the extracted directory [path], but also had to provide the system property -Djava.library.path=[path]\bin to load the native libs (DLLs).

If we directly take the binary distribution of Apache Hadoop 2.2.0 release and try to run it on Microsoft Windows, then we'll encounter ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path.

The binary distribution of Apache Hadoop 2.2.0 release does not contain some windows native components (like winutils.exe, hadoop.dll etc). These are required (not optional) to run Hadoop on Windows.

So you need to build a Windows native binary distribution of Hadoop from source, following the 'BUILDING.txt' file located inside the source distribution of Hadoop. You can also follow these posts for a step-by-step guide with screenshots.

If you face this problem when running a self-contained local application with Spark (i.e., after adding spark-assembly-x.x.x-hadoopx.x.x.jar or the Maven dependency to the project), a simpler solution would be to put winutils.exe (download from here) in 'C:\winutil\bin'. Then you can point the Hadoop home directory at that location by adding the following line to the code:

Source: Click here

The statement java.io.IOException: Could not locate executable null\bin\winutils.exe explains that a null is received when expanding or replacing an environment variable. If you look at the source of Shell.java in the Common package, you will find that the HADOOP_HOME variable is not set, so null is received in its place, hence the error.

So either the HADOOP_HOME environment variable or the hadoop.home.dir system property needs to be set properly.
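The lookup described above can be sketched roughly as follows. This is an illustrative Python approximation, not the code from Shell.java; the function name `expected_winutils_path` and its parameters are hypothetical, and it assumes the system property is consulted before the environment variable.

```python
from pathlib import PureWindowsPath


def expected_winutils_path(env, hadoop_home_dir=None):
    """Return the winutils.exe path Hadoop would look for, or None.

    `hadoop_home_dir` stands in for the hadoop.home.dir system property and
    `env` is a mapping such as os.environ (both names are illustrative).
    When neither value is set, the home directory is unknown, which is
    where the literal "null" in the error message comes from.
    """
    home = hadoop_home_dir or env.get("HADOOP_HOME")
    if not home:
        return None
    # Hadoop expects the binary under <home>\bin\winutils.exe.
    return str(PureWindowsPath(home) / "bin" / "winutils.exe")
```

Calling `expected_winutils_path(os.environ)` on the affected machine shows which file has to exist for the error to go away; a result of `None` means neither setting is visible to the process.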

Hope this helps.

Thanks, Kamleshwar.

I just ran into this issue while working with Eclipse. In my case, I had the correct Hadoop version downloaded (hadoop-2.5.0-cdh5.3.0.tgz), I extracted the contents and placed it directly in my C drive. Then I went to

Eclipse->Debug/Run Configurations -> Environment (tab) -> and added

variable: HADOOP_HOME

Value: C:\hadoop-2.5.0-cdh5.3.0

You can download winutils.exe here: http://public-repo-1.hortonworks.com/hdp-win-alpha/winutils.exe

Then copy it to your HADOOP_HOME/bin directory.

winutils.exe is required for Hadoop to perform Hadoop-related commands. Please download the hadoop-common-2.2.0 zip file; winutils.exe can be found in its bin folder. Extract the zip file and copy winutils.exe into the local hadoop/bin folder.

I was facing the same problem. Removing the bin from the HADOOP_HOME path solved it for me. The value of the HADOOP_HOME variable should be the Hadoop folder itself, without a trailing bin.

System restart may be needed. In my case, restarting the IDE was sufficient.
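As a small illustration of the fix above, the following sketch removes a trailing bin component from a candidate HADOOP_HOME value. The helper name `strip_trailing_bin` is hypothetical, and the example path is only taken from elsewhere in this thread.

```python
from pathlib import PureWindowsPath


def strip_trailing_bin(hadoop_home):
    """Return hadoop_home without a trailing 'bin' folder, if it has one."""
    path = PureWindowsPath(hadoop_home)
    # Hadoop appends \bin itself, so HADOOP_HOME must be the parent folder.
    if path.name.lower() == "bin":
        return str(path.parent)
    return str(path)
```

For instance, `strip_trailing_bin(r"C:\hadoop-2.5.0-cdh5.3.0\bin")` gives back the folder one level up, which is the value to store in HADOOP_HOME.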

Set up HADOOP_HOME variable in windows to resolve the problem.

You can find answer in org/apache/hadoop/hadoop-common/2.2.0/hadoop-common-2.2.0-sources.jar!/org/apache/hadoop/util/Shell.java :

IOException from

HADOOP_HOME_DIR from

In PySpark, to run a local Spark application from PyCharm, use the lines below.
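A minimal sketch of what such lines could look like, assuming winutils.exe has been placed under a folder such as C:\winutils\bin (a hypothetical location). The helper name `configure_hadoop_home` is also an assumption; the idea is simply to set the environment before PySpark starts its JVM, which inherits the environment of the Python process.

```python
import os


def configure_hadoop_home(hadoop_home):
    """Set HADOOP_HOME for this process and put its bin folder on PATH."""
    os.environ["HADOOP_HOME"] = hadoop_home
    bin_dir = os.path.join(hadoop_home, "bin")
    # Prepending bin lets native libraries such as hadoop.dll be found too.
    os.environ["PATH"] = bin_dir + os.pathsep + os.environ.get("PATH", "")
    return bin_dir


# Hypothetical usage: call this before the first SparkSession or
# SparkContext is created, for example
# configure_hadoop_home(r"C:\winutils")
```

Setting the variable inside the script avoids having to restart PyCharm after changing the system-wide environment variables.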


I was getting the same issue in windows. I fixed it by

- Downloading hadoop-common-2.2.0-bin-master from link.

- Creating a user variable HADOOP_HOME under Environment Variables and assigning the path of the hadoop-common bin directory as its value.

- You can verify it by running hadoop in cmd.

- Restart the IDE and Run it.

Download the desired version of the hadoop folder (for example, if you are installing Spark on Windows, the Hadoop version your Spark was built for) from this link as a zip.

Extract the zip to the desired directory. You need a directory of the form hadoop\bin (explicitly create such a hadoop\bin directory structure if you want), with bin containing all the files from the bin folder of the downloaded Hadoop. This will contain many files such as hdfs.dll, hadoop.dll, etc. in addition to winutils.exe.

Now create the environment variable HADOOP_HOME and set it to <path-to-hadoop-folder>\hadoop. Then add ;%HADOOP_HOME%\bin; to the PATH environment variable.

Open a 'new command prompt' and try rerunning your command.

- Download [winutils.exe] from the URL: https://github.com/steveloughran/winutils/hadoop-version/bin

- Paste it under HADOOP_HOME/bin

Note: You should set the environment variables:

User variable:

Variable: HADOOP_HOME

Value: Hadoop or spark dir

I used the 'hbase-1.3.0' and 'hadoop-2.7.3' versions. Setting the HADOOP_HOME environment variable and copying the 'winutils.exe' file into the HADOOP_HOME/bin folder solves the problem on Windows. Take care to set HADOOP_HOME to the installation folder of Hadoop (the /bin folder is not needed for these versions). Additionally, I preferred using the cross-platform tool Cygwin to get Linux functionality (as far as possible), because the HBase team recommends a Linux/Unix environment.

Winutils.exe is used for running the shell commands for Spark. When you need to run Spark without installing Hadoop, you need this file.

Steps are as follows:

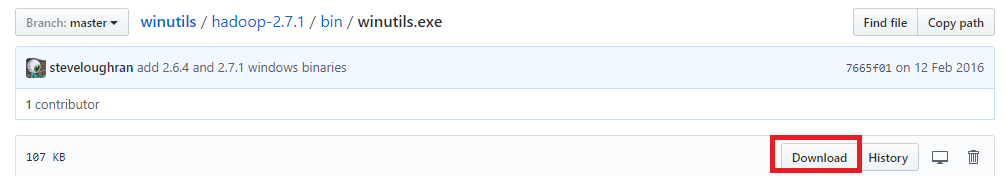

Download winutils.exe for Hadoop 2.7.1 from the following location: https://github.com/steveloughran/winutils/tree/master/hadoop-2.7.1/bin [NOTE: If you are using a different Hadoop version, download winutils from the corresponding Hadoop version folder on GitHub at the location mentioned above.]

Now, create a folder 'winutils' on the C: drive. Then create a folder 'bin' inside 'winutils' and copy winutils.exe into it. The location of winutils.exe will then be C:\winutils\bin\winutils.exe

Now, open the environment variables and set HADOOP_HOME=C:\winutils [NOTE: Please do not add \bin to HADOOP_HOME, and there is no need to add HADOOP_HOME to Path]

Your issue should now be resolved.


Build your own Hadoop distribution in order to make it run on Windows 7 with this in-depth tutorial.

Introduction

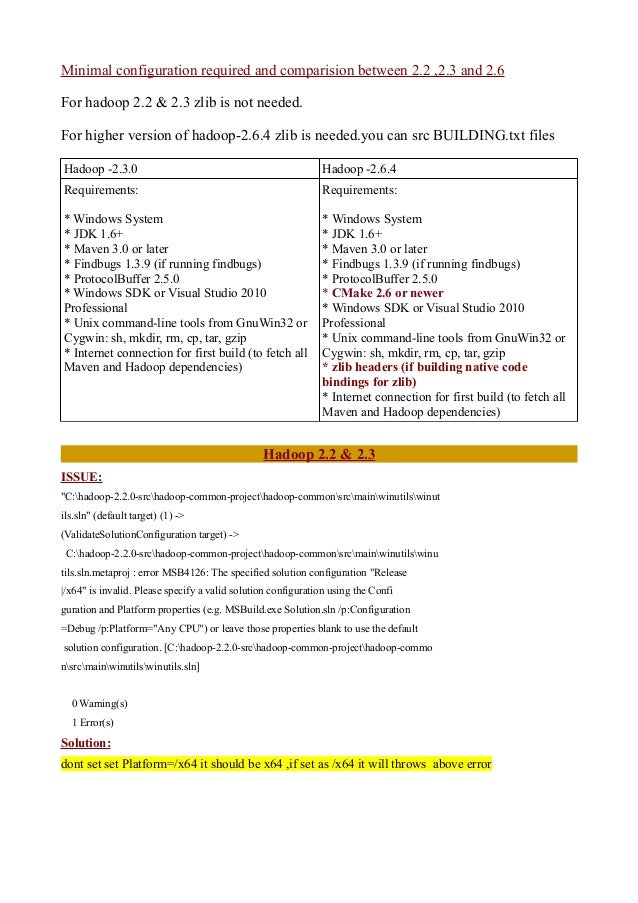

I have searched on Google and found that Hadoop provides native Windows support from version 2.2 and above, but for that we need to build it on our own, as official Apache Hadoop releases do not provide native Windows binaries. So this tutorial aims to provide a step by step guide to Build Hadoop binary distribution from Hadoop source code on Windows OS. This article will also provide instructions to setup Java, Maven, and other required components. Apache Hadoop is an open source Java project, mainly used for distributed storage and large data processing. It is designed to scale horizontally on the go and to support distributed processing on multiple machines. You can find more about Hadoop at http://hadoop.apache.org/.

Author's GitHub

I have created a bunch of Spark-Scala utilities at https://github.com/gopal-tiwari, might be helpful in some other cases.

Solution for Spark Errors

Many of you may have tried running Spark on Windows OS and faced an error in the console (shown below). This is because your Hadoop distribution does not contain native binaries for Windows OS, as they are not included in the official Hadoop Distribution. So you need to build Hadoop from its source code on your Windows OS.

Solution for Hadoop Error

This error is also related to the Native Hadoop Binaries for Windows OS. So the solution is the same as the above Spark problem, in that you need to build it for your Windows OS from Hadoop's source code.

So just follow this article and at the end of the tutorial you will be able to get rid of these errors by building a Hadoop distribution.

For this article I’m following the official Hadoop building guide at https://svn.apache.org/viewvc/hadoop/common/branches/branch-2/BUILDING.txt

Downloading the Required Files

Download Links

Download Hadoop source from http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-2.7.2/hadoop-2.7.2-src.tar.gz

Download Microsoft .NET Framework 4 (Standalone Installer) from https://www.microsoft.com/en-in/download/details.aspx?id=17718

Download Windows SDK 7 Installer from https://www.microsoft.com/en-in/download/details.aspx?id=8279, or you can also use offline installer ISO from https://www.microsoft.com/en-in/download/details.aspx?id=8442. You will find 3 different ISOs to download:


GRMSDK_EN_DVD.iso (x86)

GRMSDKX_EN_DVD.iso (AMD64)

GRMSDKIAI_EN_DVD.iso (Itanium)

Please choose based on your OS type.

Download JDK according to your OS & CPU architecture from http://www.oracle.com/technetwork/java/javase/downloads/index.html

Download and install 7-zip from http://www.7-zip.org/download.html

Download and extract Maven 3.0 or later from https://maven.apache.org/download.cgi

Download ProtocolBuffer 2.5.0 from https://github.com/google/protobuf/releases/download/v2.5.0/protoc-2.5.0-win32.zip

Download CMake 3.5.0 or higher from https://cmake.org/download/

Download Cygwin installer from https://cygwin.com/

NOTE: We can use Windows 7 or later for building Hadoop. In my case I have used Windows Server 2008 R2.

NOTE: I have used a freshly installed OS and removed all .NET Framework and C++ redistributables from the machine as they will be getting installed with Windows SDK 7.1. We are also going to install .NET Framework 4 in this tutorial. If you have any Visual Studio versions installed on your machine then this is likely to cause issues in the build process because of version mismatch of some .NET components and in some cases will not allow you to install Windows SDK 7.1.

Installation

A. JDK

1. Start JDK installation, click next, leave installation path as default, and proceed.

2. Again, leave the installation path as default for JRE if it asks, click next to install, and then click close to finish the installation.

3. If you didn't change the path during installation, your Java installation path will be something like 'C:\Program Files\Java\jdk1.8.0_65'.

4. Now right click on My Computer and select Properties, then click on Advanced or go to Control Panel > System > Advanced System Settings.

5. Click the Environment Variables button.

6. Hit the New button in the System Variables section then type JAVA_HOME in the Variable name field and give your JDK installation path in the Variable value field.

a. If the path contains spaces, use the shortened path name, for example “C:\Progra~1\Java\jdk1.8.0_74” for Windows 64-bit systems

i. Progra~1 for 'Program Files'

ii. Progra~2 for 'Program Files(x86)'

7. It should look like:

8. Now click OK.

9. Search for the Path variable in the “System Variable” section in the “Environment Variables” dialogue box you just opened.

10. Edit the path and type “;%JAVA_HOME%\bin” at the end of the text already written there just like the image below:

11. To confirm your Java installation, just open cmd and type “java -version”. You should be able to see the version of Java you just installed.

If your command prompt looks something like the image above, you are good to go. Otherwise, recheck whether your setup version matches the OS architecture (x86, x64) and whether the environment variable paths are correct.
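The shortened path from step 6 can be derived mechanically. The sketch below is only a textual substitution using the two short names listed above; the function name `to_short_program_files` is hypothetical, and the real 8.3 short names on a given machine may differ (they can be listed with `dir /x` in cmd).

```python
def to_short_program_files(path):
    """Replace 'Program Files' folders with the short names from step 6."""
    replacements = [
        # The (x86) variants must be tried before the plain name.
        ("Program Files (x86)", "Progra~2"),
        ("Program Files(x86)", "Progra~2"),
        ("Program Files", "Progra~1"),
    ]
    for long_name, short_name in replacements:
        if long_name in path:
            return path.replace(long_name, short_name, 1)
    return path
```

The result is a JAVA_HOME value without spaces, which is what the build scripts expect.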

B. .NET Framework 4

1. Double-click on the downloaded offline installer of Microsoft .NET Framework 4. (i.e. dotNetFx40_Full_x86_x64.exe).

2. When prompted, accept the license terms and click the install button.

3. At the end, just click finish and it’s done.

C. Windows SDK 7

1. Now go to the Programs and Features (Uninstall a program) window from My Computer or Control Panel.

2. Uninstall all Microsoft Visual C++ Redistributables, if they got installed with the OS because they may be a newer version than the one which Win SDK 7.1 requires. During SDK installation they will cause errors.

3. Now open your downloaded Windows 7 SDK ISO file using 7zip and extract it to the C:\WinSDK7 folder. You can also mount it as a virtual CD drive if you have that feature.

4. You will have the following files in your SDK folder:

5. Now open your Windows SDK folder and run setup.

6. Follow the instructions and install the SDK.

7. At the end when you get a window saying Set Help Library Manager, click cancel.

D. Maven

1. Now extract the downloaded Maven zip file to the C drive.

2. For this tutorial we are using Maven 3.3.3.

3. Now open the Environment Variables panel just like we did during JDK installation to set M2_HOME.

4. Create a new entry in System Variables and set the name as M2_HOME and value as your Maven path before the bin folder, for example: C:\Maven-3.3.3. Just like the image below:

5. Now click OK.

6. Search for the Path variable in the “System Variable” section, click the edit button, and type “;%M2_HOME%\bin” at the end of the text already written there just like the image below:

7. To confirm your Maven installation just open cmd and type “mvn -v”. You should be able to see what version of Maven you just installed.

If your command prompt looks like the image above, you are done with Maven.

E. Protocol Buffer 2.5.0

1. Extract the Protocol Buffer zip to C:\protoc-2.5.0-win32.

2. Now we need to add this folder to the “Path” variable in the Environment System Variables section, just like the image below:

3. To check if the protocol buffer installation is working fine just type command “protoc --version”

Your command prompt should look like this.

F. Cygwin

1. Download Cygwin according to your OS architecture

a. 64 bit (setup-x86_64.exe)

b. 32 bit (setup-x86.exe)

2. Start Cygwin installation and choose 'Install from Internet' when it asks you to choose a download source, then click next.

3. Follow the instructions further and choose any Download site when prompted. If it fails, try any other site from the list and click next:

4. Once the download is finished, it will show you the list of default packages to be installed. According to the Hadoop official build instructions (https://svn.apache.org/viewvc/hadoop/common/branches/branch-2/BUILDING.txt?view=markup) we only need six packages: sh, mkdir, rm, cp, tar, gzip. For the sake of simplicity, just click next and it will download all the default packages.

5. Once the installation is finished, we need to set the Cygwin installation folder to the System Environment Variable 'Path.' To do that, just open the Environment Variables panel.

6. Edit the Path variable in the “System Variable” section, add a semicolon, and then paste the path to the bin folder of your Cygwin installation. Just like the image below:

7. You can verify Cygwin installation just by running command “uname -r” or “uname -a” as shown below:

G. CMake

1. Run the downloaded CMake installer.

2. On the below screen make sure you choose “Add CMake to the PATH for all users.”

3. Now click Next and follow the instructions to complete the installation.

4. To check that the CMake installation is correct, open a new command prompt and type “cmake --version”. You will be able to see the cmake version installed.
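The individual version checks in sections A through G can be complemented by one pass over the PATH. The following is a minimal sketch using Python's standard `shutil.which`; the tool list is assembled from this tutorial (including the six Cygwin packages named above), and the names `REQUIRED_TOOLS`, `find_tools`, and `missing_tools` are illustrative.

```python
import shutil

# Executables this tutorial expects to be reachable through PATH.
REQUIRED_TOOLS = ["java", "mvn", "protoc", "cmake",
                  "sh", "mkdir", "rm", "cp", "tar", "gzip"]


def find_tools(tools=REQUIRED_TOOLS):
    """Map each tool name to its resolved location, or None if not found."""
    return {tool: shutil.which(tool) for tool in tools}


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the sorted names of tools that are not on PATH."""
    found = find_tools(tools)
    return sorted(name for name, location in found.items() if location is None)
```

Running `missing_tools()` in a freshly opened command prompt should return an empty list before the build is started; any name it reports points to a Path entry that still needs fixing.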

Building Hadoop

1. Open your downloaded Hadoop source code file, i.e. hadoop-2.7.2-src.tar.gz with 7zip.

2. Inside that you will find 'hadoop-2.7.2-src.tar' — double click on that file.

3. Now you will be able to see Hadoop-2.7.2-src folder. Open that folder and you will be able to see the source code as shown here:

4. Now click Extract and give a short path like C:\hdp and click OK. If you give a long path you may get a runtime error due to Windows' maximum path length limitation.

5. Once the extraction is finished we need to add a new “Platform” System Variable. The values for the platform will be:

a. x64 (for 64-bit OS)

b. Win32 (for 32-bit OS)

Please note that the variable name Platform is case sensitive, so do not change its letter case.

6. To add a Platform in the System variable, just open the Environment variables dialogue box, click on the “New…” button in the System variable section, and fill the Name and Value text boxes as shown below:

7. Before we proceed with the build, have a look at the state of all installed programs on my machine:

8. Also check the structure of Hadoop sources extracted on the C drive:

9. Open a Windows SDK 7.1 Command Prompt window from Start --> All Programs --> Microsoft Windows SDK v7.1, and click on Windows SDK 7.1 Command Prompt.

10. Change the directory to your extracted Hadoop source folder. For this tutorial it is C:\hdp, so type the command cd C:\hdp.

11. Now type command mvn package -Pdist,native-win -DskipTests -Dtar

NOTE: You need a working internet connection as Maven will try to download all required dependencies from online repositories.

12. If everything goes smoothly it will take around 30 minutes. It depends upon your Internet connection and CPU speed.

13. If everything goes well you will see a success message like the below image. Your native Hadoop distribution will be created at C:\hdp\hadoop-dist\target\hadoop-2.7.2

14. Now open C:\hdp\hadoop-dist\target\hadoop-2.7.2. You will find “hadoop-2.7.2.tar.gz”. This Hadoop distribution contains native Windows binaries and can be used on a Windows OS for Hadoop clusters.

15. For running a Hadoop instance you need to change some configuration files like hadoop-env.cmd, core-site.xml, hdfs-site.xml, slaves, etc. For those changes please follow this official link to set up and run Hadoop on Windows: https://wiki.apache.org/hadoop/Hadoop2OnWindows.
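Steps 5 and 11 above could also be scripted. The sketch below is an assumption-laden illustration rather than part of the official build instructions: it checks that the Platform variable holds one of the two values from step 5 and then runs the Maven command from step 11 in the source folder. The function names are hypothetical, and it should be launched from the Windows SDK 7.1 Command Prompt so that the compiler environment is inherited.

```python
import os
import shutil
import subprocess

VALID_PLATFORMS = {"x64", "Win32"}


def build_command():
    """Return the Maven invocation from step 11 as an argument list."""
    return ["mvn", "package", "-Pdist,native-win", "-DskipTests", "-Dtar"]


def check_platform(env):
    """Return the Platform value from env, or raise if it is not usable."""
    value = env.get("Platform")
    if value not in VALID_PLATFORMS:
        raise ValueError("Platform must be x64 or Win32, got %r" % (value,))
    return value


def run_build(source_dir):
    """Run the build in source_dir, for example C:\\hdp in this tutorial."""
    check_platform(os.environ)
    mvn = shutil.which("mvn")  # resolves mvn.cmd on Windows
    if mvn is None:
        raise FileNotFoundError("mvn was not found on PATH")
    command = [mvn] + build_command()[1:]
    return subprocess.run(command, cwd=source_dir, check=True)
```

Because `check=True` is passed, a failed Maven build raises `subprocess.CalledProcessError` instead of returning silently.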
